Amazon SageMaker is a fully managed service that enables developers and data scientists to quickly build, train, and deploy machine learning (ML) models. Tens of thousands of customers, including Intuit, Voodoo, ADP, Cerner, Dow Jones, and Thomson Reuters, use Amazon SageMaker to remove much of the heavy lifting from their ML processes. With Amazon SageMaker, you can deploy your ML models to hosted endpoints and get inference results in real time. You can easily view endpoint performance metrics in Amazon CloudWatch, enable autoscaling to scale endpoints automatically based on traffic, and update models in production without losing availability.

In many cases, such as e-commerce applications, offline model evaluation is not sufficient, and you need to A/B test models in production before deciding whether to update them. Amazon SageMaker makes it easy to run A/B tests on ML models by running multiple production variants on an endpoint. You can use production variants to test ML models trained with different training datasets, algorithms, and ML frameworks, to test how models behave on different instance types, or to test a combination of all of the above.

Until now, Amazon SageMaker split inference traffic between variants based on the share you specified for each variant on the endpoint. This is useful when you need to control how much traffic goes to each variant but don't need to route requests to a specific variant, for example, when you update a model in production and compare it with the existing model by directing some of your traffic to the new one. Depending on your use case, however, you may need a specific model to handle an inference request, which means invoking a specific variant. For example, you may want to test and compare how your ML model behaves across different customer segments, with all requests from customers in one segment processed by a particular variant.

You can now choose which variant handles each inference request. When you specify the TargetVariant header in an inference request, Amazon SageMaker processes the request with the specified variant.

Amazon Alexa uses Amazon SageMaker to manage various ML workloads. The Amazon Alexa team frequently updates ML models to stay ahead of new security threats. Before releasing a new model version into production, the team uses Amazon SageMaker's model testing capabilities to test, compare, and determine which version meets their security, privacy, and business needs. To learn more about the research the team is doing to protect customers' security and privacy, see Leveraging Hierarchical Representations for Preserving Privacy and Utility in Text.

Nathanael Teissier, Software Development Manager, Alexa Experiences and Devices Division, said: "Amazon SageMaker's model testing capabilities allowed us to test new versions of our privacy-preserving models, all of which meet our high standards for customer privacy. The new ability to select the variant that handles each request opens new possibilities for deployment strategies that A/B test without changing existing configurations."

This post describes how to easily A/B test ML models on Amazon SageMaker by distributing traffic to variants and calling specific variants. The models we test are trained using different training datasets and deployed as production variants on Amazon SageMaker endpoints.

In production ML workflows, data scientists and engineers often try to improve models through hyperparameter tuning, training on additional or more recent data, or improved feature selection. Running A/B tests of the new and old models on production traffic is an effective final step in validating a new model. In A/B testing, you test different variants of a model and compare how each variant performs relative to the others. If the new version performs better than the existing version, you replace the old model.

Amazon SageMaker allows you to test multiple models or model versions using production variants on the same endpoint. Each ProductionVariant identifies an ML model and the resources deployed to host it. To distribute invocation requests across multiple production variants, you can either assign a share of traffic to each variant or invoke a variant directly for each request. The next sections look at both of these ML model testing methods.

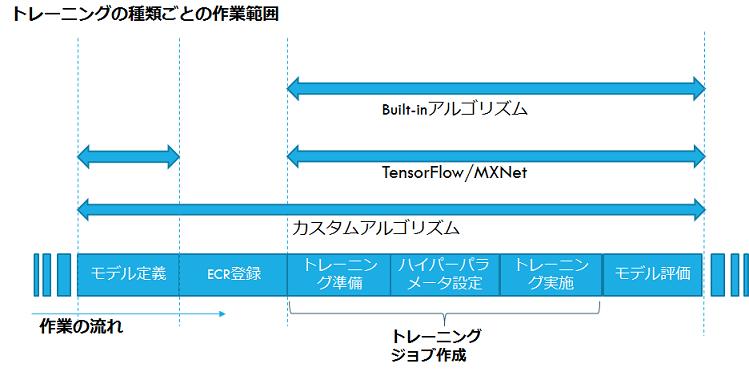

To test multiple models by distributing traffic across them, specify a weight for each production variant in the endpoint configuration to set the percentage of traffic routed to each model. Amazon SageMaker distributes traffic among production variants based on the weights you specify. This is the default behavior when using production variants. The following diagram shows how this works in more detail. Each inference response also contains the name of the variant that handled the request.
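As a rough illustration of weight-based distribution, the sketch below simulates it in plain Python. It assumes that each variant's expected share is its weight divided by the sum of all weights. The helper names `traffic_shares` and `simulate_routing` are hypothetical and not part of any SageMaker API, and the random draw is only a stand-in for however the service routes requests internally.

```python
import random
from collections import Counter


def traffic_shares(weights):
    """Convert variant weights into expected traffic fractions (weight / sum of weights)."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one variant needs a positive weight")
    return {name: w / total for name, w in weights.items()}


def simulate_routing(weights, n_requests, seed=0):
    """Randomly assign n_requests to variants in proportion to their weights."""
    rng = random.Random(seed)
    names = list(weights)
    picks = rng.choices(names, weights=[weights[n] for n in names], k=n_requests)
    return Counter(picks)


# Two variants with equal weights, as in the walkthrough later in this post.
weights = {"Variant1": 0.5, "Variant2": 0.5}
shares = traffic_shares(weights)
counts = simulate_routing(weights, n_requests=10_000)
print(shares)
print(dict(counts))
```

Because only the ratio between weights matters in this sketch, weights of 1 and 3 would yield the same shares as 0.25 and 0.75.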

To test multiple models by calling a specific model for each request, set the TargetVariant header in the request. If you set a traffic distribution by specifying weights and also specify a TargetVariant, targeted routing takes precedence over the traffic distribution. The following diagram shows how this works in more detail. In this example, the inference request invokes ProductionVariant3. You can also call different variants concurrently, one for each request.
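The precedence rule can be pictured with a small plain-Python sketch. The function `choose_variant` is a hypothetical helper, not a SageMaker API, and the three weights are made-up values for illustration. The sketch rejects unknown variant names with a `ValueError`, which is a choice made here and says nothing about how the service responds in that case.

```python
import random


def choose_variant(weights, target_variant=None, rng=random):
    """Pick the variant for one request.

    An explicit target_variant wins; otherwise fall back to a weighted random draw.
    """
    if target_variant is not None:
        if target_variant not in weights:
            raise ValueError(f"unknown variant: {target_variant}")
        return target_variant
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Illustrative weights only; they are not taken from the original diagram.
weights = {
    "ProductionVariant1": 0.2,
    "ProductionVariant2": 0.3,
    "ProductionVariant3": 0.5,
}

# Targeted requests: the weights are ignored.
targeted = [choose_variant(weights, target_variant="ProductionVariant3") for _ in range(5)]
print(targeted)

# Untargeted request: the weights decide.
untargeted = choose_variant(weights, rng=random.Random(1))
print(untargeted)
```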

This post walks you through an example of how to use this new feature. Using an Amazon SageMaker Jupyter notebook, you create an endpoint that hosts two models (using ProductionVariant). Both models were trained using the Amazon SageMaker built-in XGBoost algorithm on a dataset for predicting mobile operator customer churn. For more information on how to train the models, see Customer Churn Prediction with XGBoost. In the following use case, we trained each model on a different subset of the same dataset and used a different version of the XGBoost algorithm for each model.

To try these steps yourself, use the sample "A/B Testing with Amazon SageMaker" Jupyter notebook. You can run it on either Amazon SageMaker Studio or an Amazon SageMaker notebook instance. The dataset we use is publicly available and is mentioned in "Discovering Knowledge in Data" by Daniel T. Larose. The author attributes the dataset to the Machine Learning Dataset Repository at the University of California, Irvine.

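The walkthrough covers the following steps: creating and deploying the models, invoking the deployed models, evaluating variant performance, and shifting more inference traffic to the chosen variant.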

First, define where the models reside in Amazon Simple Storage Service (Amazon S3). These locations are used when deploying the models in subsequent steps. See the code below.

    model_url = f"s3://{path_to_model_1}"
    model_url2 = f"s3://{path_to_model_2}"

Next, create a model object from each container image and model data location. You use these model objects to deploy production variants on the endpoint. You can develop the models by training them with different datasets, algorithms, ML frameworks, and hyperparameters. See the code below.

    from datetime import datetime

    import boto3
    from sagemaker.amazon.amazon_estimator import get_image_uri

    # sm_session and role are assumed to be defined earlier in the notebook.
    model_name = f"DEMO-xgb-churn-pred-{datetime.now():%Y-%m-%d-%H-%M-%S}"
    model_name2 = f"DEMO-xgb-churn-pred2-{datetime.now():%Y-%m-%d-%H-%M-%S}"

    # Each model uses a different version of the XGBoost container.
    image_uri = get_image_uri(boto3.Session().region_name, 'xgboost', '0.90-1')
    image_uri2 = get_image_uri(boto3.Session().region_name, 'xgboost', '0.90-2')

    sm_session.create_model(
        name=model_name,
        role=role,
        container_defs={'Image': image_uri, 'ModelDataUrl': model_url}
    )
    sm_session.create_model(
        name=model_name2,
        role=role,
        container_defs={'Image': image_uri2, 'ModelDataUrl': model_url2}
    )

Create two production variants, each with its own model and resource requirements (instance type and count). Set the initial_weight of both variants to 0.5 to split incoming requests evenly between them. See the code below.

    from sagemaker.session import production_variant

    variant1 = production_variant(
        model_name=model_name,
        instance_type="ml.m5.xlarge",
        initial_instance_count=1,
        variant_name='Variant1',
        initial_weight=0.5
    )
    variant2 = production_variant(
        model_name=model_name2,
        instance_type="ml.m5.xlarge",
        initial_instance_count=1,
        variant_name='Variant2',
        initial_weight=0.5
    )

Use the following code to deploy the production variants to an Amazon SageMaker endpoint.

    endpoint_name = f"DEMO-xgb-churn-pred-{datetime.now():%Y-%m-%d-%H-%M-%S}"
    print(f"EndpointName={endpoint_name}")

    sm_session.endpoint_from_production_variants(
        name=endpoint_name,
        production_variants=[variant1, variant2]
    )

You can now send data to this endpoint and get inferences in real time. This post uses both approaches to testing models that Amazon SageMaker supports: distributing traffic across variants and invoking specific variants.

Amazon SageMaker distributes traffic among the production variants of your endpoint based on the weights you set in the variant definitions earlier. Use the following code to invoke the endpoint.

    import time

    # Get a subset of test data for a quick test (notebook shell command).
    !tail -120 test_data/test-dataset-input-cols.csv > test_data/test_sample_tail_input_cols.csv
    print(f"Sending test traffic to the endpoint {endpoint_name}. \nPlease wait...")

    # sm_runtime is assumed to be the SageMaker runtime client created earlier in the notebook.
    with open('test_data/test_sample_tail_input_cols.csv', 'r') as f:
        for row in f:
            print(".", end="", flush=True)
            payload = row.rstrip('\n')
            sm_runtime.invoke_endpoint(
                EndpointName=endpoint_name,
                ContentType="text/csv",
                Body=payload
            )
            time.sleep(0.5)

    print("Done!")

Amazon SageMaker emits metrics such as latency and invocation count for each variant to Amazon CloudWatch. For a full list of endpoint metrics, see Monitor Amazon SageMaker with Amazon CloudWatch. You can query Amazon CloudWatch for the number of invocations per variant to see how invocations are split across variants by default. The result should look something like the following graph.
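One way to query the per-variant invocation count is the CloudWatch `GetMetricStatistics` operation. The sketch below only builds the request arguments, so it runs without AWS credentials. The helper name `invocation_query`, the endpoint name `my-endpoint`, the 30-minute window, and the 60-second period are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone


def invocation_query(endpoint_name, variant_name, start_time, end_time, period=60):
    """Build keyword arguments for CloudWatch GetMetricStatistics (Invocations per variant)."""
    return {
        "Namespace": "AWS/SageMaker",
        "MetricName": "Invocations",
        "Dimensions": [
            {"Name": "EndpointName", "Value": endpoint_name},
            {"Name": "VariantName", "Value": variant_name},
        ],
        "StartTime": start_time,
        "EndTime": end_time,
        "Period": period,        # seconds per datapoint
        "Statistics": ["Sum"],   # total invocations in each period
    }


end = datetime.now(timezone.utc)
start = end - timedelta(minutes=30)
query = invocation_query("my-endpoint", "Variant1", start, end)  # placeholder endpoint name
print(query["MetricName"], query["Dimensions"])

# With AWS credentials configured, the query could be sent like this:
#   import boto3
#   cw = boto3.client("cloudwatch")
#   datapoints = cw.get_metric_statistics(**query)["Datapoints"]
```

Running the same query once per variant and comparing the sums gives the split of invocations between variants.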

The following use case uses the new Amazon SageMaker feature to invoke a specific variant. To do this, use the new parameter to define which ProductionVariant to invoke. The following code invokes Variant1 for every request. You can use the same process to invoke other variants.

    print(f"Sending test traffic to the endpoint {endpoint_name}. \nPlease wait...")

    with open('test_data/test_sample_tail_input_cols.csv', 'r') as f:
        for row in f:
            print(".", end="", flush=True)
            payload = row.rstrip('\n')
            sm_runtime.invoke_endpoint(
                EndpointName=endpoint_name,
                ContentType="text/csv",
                Body=payload,
                TargetVariant="Variant1"  # route every request to Variant1
            )

To verify that Variant1 processed all of the new invocations, query CloudWatch for the number of invocations per variant. The following graph shows that Variant1 served all requests in the most recent invocations (those with the latest timestamp). There were no invocations of Variant2.
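Because each inference response names the variant that handled it, routing can also be checked directly from the responses, without waiting for CloudWatch. The sketch below assumes the responses were collected in a list. The dictionaries shown are made-up stand-ins that carry only the `InvokedProductionVariant` field, and `count_by_variant` is a hypothetical helper.

```python
from collections import Counter


def count_by_variant(responses):
    """Count responses by the variant reported in each response."""
    return Counter(r["InvokedProductionVariant"] for r in responses)


# Made-up stand-ins for invoke_endpoint responses; real responses carry more fields.
responses = [
    {"InvokedProductionVariant": "Variant1"},
    {"InvokedProductionVariant": "Variant1"},
    {"InvokedProductionVariant": "Variant1"},
]

counts = count_by_variant(responses)
print(dict(counts))

# Fails loudly if any request was served by a different variant.
assert set(counts) == {"Variant1"}, "some requests were not served by Variant1"
```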

The following graphs evaluate accuracy, precision, recall, F1 score, and ROC/AUC for Variant1.

The following graphs evaluate the same metrics for inference with Variant2.

Variant2 performs better on most of the predefined metrics, so it is the likely choice for serving more of your production inference traffic.
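For reference, several of the metrics named above can be computed from ground-truth labels and a variant's predicted scores with a few lines of plain Python. This is a minimal sketch that assumes each variant returns a churn probability and that 0.5 is the decision threshold. The labels and scores are made-up values, not results from the models in this post, and ROC/AUC is left out for brevity.

```python
def classification_metrics(labels, scores, threshold=0.5):
    """Compute accuracy, precision, recall, and F1 for binary labels and scores."""
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    tn = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 0)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)

    accuracy = (tp + tn) / len(labels)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up data for illustration only.
labels = [1, 0, 1, 1, 0, 0, 1, 0]
scores = [0.9, 0.2, 0.4, 0.8, 0.6, 0.1, 0.7, 0.3]

metrics = classification_metrics(labels, scores)
print(metrics)
```

Computing the same dictionary for each variant's predictions on the same test rows gives a side-by-side comparison.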

Now that we know Variant2 outperforms Variant1, let's shift more traffic to it.

You can still use TargetVariant to invoke the selected variant. A simpler alternative is to update the weights assigned to each variant using UpdateEndpointWeightsAndCapacities. This changes the distribution of traffic to your production variants without requiring an update to the endpoint.

Consider a scenario where you specify variant weights to split traffic 50/50 when creating a model and configuring endpoints. The CloudWatch metric below for total calls for each variant shows the call pattern for each variant.

To shift 75% of the traffic to Variant2, assign new weights to each variant using UpdateEndpointWeightsAndCapacities. See the code below.

    # sm is assumed to be the SageMaker client created earlier in the notebook.
    sm.update_endpoint_weights_and_capacities(
        EndpointName=endpoint_name,
        DesiredWeightsAndCapacities=[
            {"DesiredWeight": 0.25, "VariantName": variant1["VariantName"]},
            {"DesiredWeight": 0.75, "VariantName": variant2["VariantName"]}
        ]
    )

Amazon SageMaker now sends 75% of inference requests to Variant2 and the remaining 25% to Variant1.

In the CloudWatch metric below for the total number of calls for each variant, Variant2 has a higher number of calls than Variant1.
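If you adjust weights more than once, a small helper can build the `DesiredWeightsAndCapacities` list from a mapping of variant names to weights. This is a sketch only: `desired_weights` is a hypothetical helper, and its validation rules (no negative weights, at least one positive weight) are choices made here and not documented service behavior.

```python
def desired_weights(weights):
    """Build a DesiredWeightsAndCapacities list from {variant name: weight}."""
    if not weights:
        raise ValueError("no variants given")
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must not be negative")
    if sum(weights.values()) == 0:
        raise ValueError("at least one variant needs a positive weight")
    return [
        {"VariantName": name, "DesiredWeight": float(w)}
        for name, w in weights.items()
    ]


payload = desired_weights({"Variant1": 0.25, "Variant2": 0.75})
print(payload)

# With AWS credentials configured, the payload could be applied like this:
#   import boto3
#   sm = boto3.client("sagemaker")
#   sm.update_endpoint_weights_and_capacities(
#       EndpointName=endpoint_name,
#       DesiredWeightsAndCapacities=payload,
#   )
```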

If you continue to monitor your metrics and are satisfied with a variant's performance, you can route 100% of your traffic to that variant. For this use case, we used UpdateEndpointWeightsAndCapacities to update the traffic assignments for the variants. The weight for Variant1 is set to 0.0 and the weight for Variant2 is set to 1.0, so Amazon SageMaker sends 100% of inference requests to Variant2. See the code below.

    sm.update_endpoint_weights_and_capacities(
        EndpointName=endpoint_name,
        DesiredWeightsAndCapacities=[
            {"DesiredWeight": 0.0, "VariantName": variant1["VariantName"]},
            {"DesiredWeight": 1.0, "VariantName": variant2["VariantName"]}
        ]
    )

The CloudWatch metric below for the total number of invocations for each variant shows that Variant2 is processing all inference requests and that Variant1 is processing none.

Now you can safely update the endpoint and remove Variant1 from the endpoint. You can also continue to test new models in production by adding new variants to your endpoint and repeating the same steps.

Amazon SageMaker makes it easy to A/B test ML models in production by running multiple production variants on an endpoint. You can use SageMaker's capabilities to test models trained with different training datasets, hyperparameters, algorithms, or ML frameworks, to test how models behave on different instance types, or to test a combination of all of the above. Previously, Amazon SageMaker split inference traffic between variants based on the share you specified for each variant on the endpoint. Now, when you test a model for a specific customer segment, you can specify the variant that handles the inference request using the TargetVariant header, and Amazon SageMaker routes the request to the specified variant. For more information about A/B testing, see AWS Developer Guide: Test models in production.

Kieran Kavanagh is a Principal Solutions Architect at Amazon Web Services. In addition to working with customers, he designs and builds AWS technical solutions and has a strong interest in machine learning. In his spare time, he enjoys hiking, snowboarding, and practicing martial arts.

Aakash Pydi is a Software Development Engineer on the Amazon SageMaker team. He is passionate about helping developers make their machine learning workflows efficient and productive. In his spare time, he enjoys reading (science fiction, economics, history, philosophy), playing games (real-time strategy), and spending time chatting.

David Nigenda is a Software Development Engineer on the Amazon SageMaker team. His current focus is on providing useful insight into production machine learning workflows. In his spare time, he plays with his children.