Amazon SageMaker recently launched support for local training using the pre-built TensorFlow and MXNet containers. Amazon SageMaker is a flexible machine learning platform that lets you build, train, and deploy machine learning models into production more effectively. Amazon SageMaker training environments are managed. This means that spinning up clusters on the fly, loading algorithm containers, retrieving data from Amazon S3, running code, writing results to Amazon S3, and tearing down clusters all happen without you having to think about them. The ability to offload training to a separate multi-node GPU cluster is a big advantage. Spinning up new hardware each time is good for repeatability and security, but it adds friction when you are testing and debugging algorithm code.

With the Amazon SageMaker deep learning containers, you write TensorFlow or MXNet scripts as you normally would, and then deploy them to pre-built containers in managed, production-grade environments for both training and hosting. Until now, these containers were only available in Amazon SageMaker-specific environments. They have recently been open sourced. This means you can pull the containers into your working environment and use the custom code built into the Amazon SageMaker Python SDK to test your algorithms locally by changing just one line of code. You can then iterate and test your work without waiting for a new training or hosting cluster to be built each time. A common technique in machine learning is to iterate locally on a small sample of the dataset and then scale up to distributed training on the full dataset. In many cases that means rewriting the whole process and hoping you don't introduce bugs along the way. Amazon SageMaker's local mode lets you switch seamlessly between local and distributed managed training by changing a single line of code. All other behavior is the same.

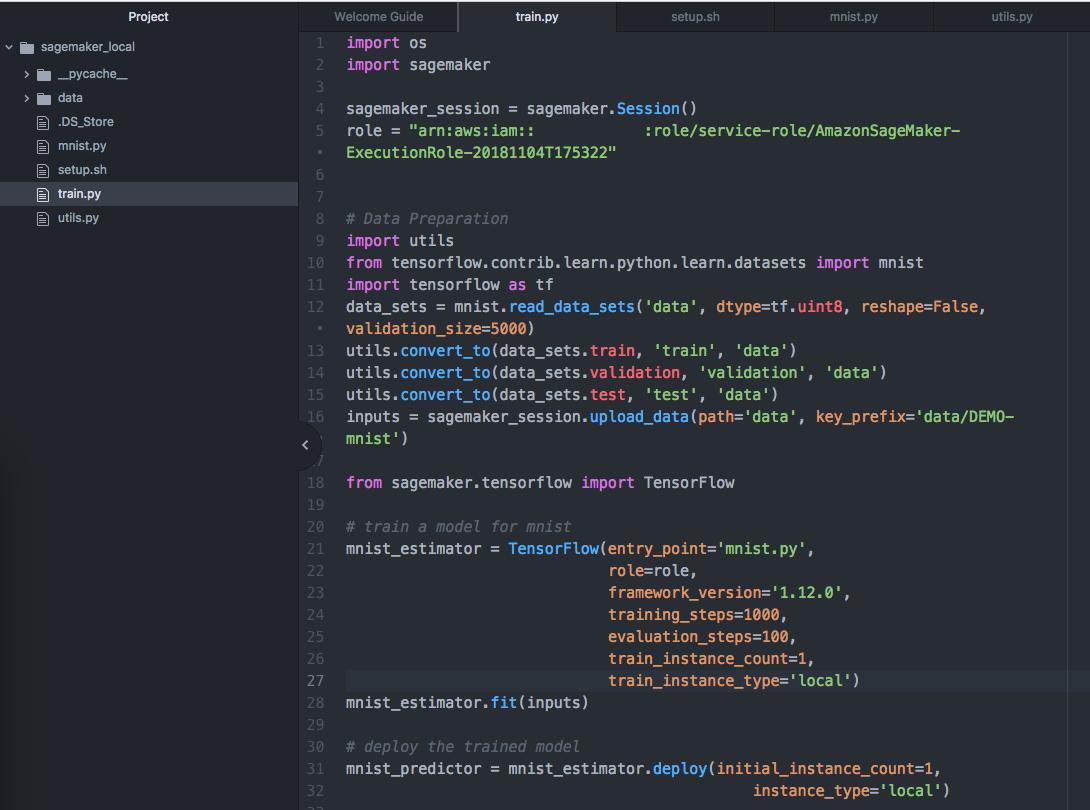

The Amazon SageMaker Python SDK's local mode can emulate CPU (single and multi-instance) and GPU (single-instance) SageMaker training jobs by changing one argument in the TensorFlow or MXNet estimator. To do this, it uses Docker Compose and NVIDIA Docker. It also pulls the Amazon SageMaker TensorFlow or MXNet container images, so you need access to the public Amazon ECR repositories from your local environment. If you use a SageMaker notebook instance as your local environment, this script will install the necessary prerequisites. Otherwise, you can install them yourself and upgrade to the latest version of the SageMaker Python SDK by running: pip install -U sagemaker.
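Before switching to local mode, it can help to confirm that the tools mentioned above are actually installed. The following is a minimal sketch, not part of the SageMaker SDK: the helper `missing_tools` is hypothetical, and the executable names (`docker`, `docker-compose`, `nvidia-smi`) are assumptions that may differ depending on how Docker and the NVIDIA tooling were installed on your machine.

```python
import shutil

# Executable names are assumptions; adjust them to match your installation.
REQUIRED_TOOLS = ["docker", "docker-compose"]
GPU_TOOLS = ["nvidia-smi"]  # only relevant for GPU local mode


def missing_tools(tools, which=shutil.which):
    """Return the entries of `tools` that cannot be found on PATH.

    `which` is injectable so the function can be exercised without
    depending on what is installed on the current machine.
    """
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    for tool in missing_tools(REQUIRED_TOOLS + GPU_TOOLS):
        print("not found on PATH: {}".format(tool))
```

An empty result only shows that the executables are on PATH. It does not verify that the Docker daemon is running or that you can reach the Amazon ECR repositories.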

We have provided two example notebooks, one for TensorFlow and one for MXNet, that demonstrate how to use local mode with MNIST. However, Amazon SageMaker also just announced the availability of larger notebook instance types, so let's try a larger example: training an image classifier on 50,000 color images using 4 GPUs. We train a ResNet network using MXNet Gluon on the CIFAR-10 dataset and run the whole thing on a single ml.p3.8xlarge notebook instance. If you later want to run on multiple machines, or train on a recurring basis without managing hardware, you can easily migrate the same code you build here to the Amazon SageMaker managed training environment.

Let's start by creating a new notebook instance. Log in to the AWS Management Console and select the Amazon SageMaker service. Then select Create notebook instance in the Amazon SageMaker console to open the following page.

Once the notebook instance has started, create a new Jupyter notebook and begin setting it up. Alternatively, you can use a predefined notebook here. To concentrate on local mode, we skip some background information. If you are new to deep learning, this blog post might be a good introduction. Or check out our other SageMaker sample notebook, which also fits a ResNet on CIFAR-10 but trains in the Amazon SageMaker managed training environment.

With the prerequisites installed, the libraries loaded, and the dataset downloaded, upload the dataset to an Amazon S3 bucket. Note that although we run the training locally, we still access the data in Amazon S3 for consistency with SageMaker training.

    inputs = sagemaker_session.upload_data(path='data', key_prefix='data/DEMO-gluon-cifar10')

Next, define the MXNet estimator. The estimator points to the cifar10.py script, which contains the network specification and the train() function. It also supplies information about the job, such as the hyperparameters and the IAM role. Most importantly, it sets train_instance_type to 'local_gpu'. That is the only change needed to switch the entire training from the SageMaker managed training environment to your local notebook instance.

    m = MXNet('cifar10.py',
              role=role,
              train_instance_count=1,
              train_instance_type='local_gpu',
              hyperparameters={'batch_size': 1024,
                               'epochs': 50,
                               'learning_rate': 0.1,
                               'momentum': 0.9})

    m.fit(inputs)

The first time the estimator runs, it needs to download the container image from its Amazon ECR repository, but after that training can begin immediately. There is no need to wait for a separate training cluster to be provisioned. On subsequent runs, which are often needed when iterating and testing, changes to your MXNet or TensorFlow script start running right away.
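Because the instance type is the only thing that changes between local and managed training, one way to keep a notebook switchable is to compute that single value in one place. The sketch below is illustrative only: `training_instance_type` is a hypothetical helper, not an SDK function. It assumes that 'local' is the SDK's value for CPU local mode (the post itself only uses 'local_gpu'), and the default managed type 'ml.p3.8xlarge' is simply an example choice.

```python
def training_instance_type(run_locally, use_gpu=True, managed_type="ml.p3.8xlarge"):
    """Return the string to pass as train_instance_type.

    run_locally  -- True to train in local mode on the current machine
    use_gpu      -- in local mode, choose 'local_gpu' over 'local' (CPU)
    managed_type -- instance type for a managed training job (example value)
    """
    if run_locally:
        return "local_gpu" if use_gpu else "local"
    return managed_type
```

You could then pass `train_instance_type=training_instance_type(run_locally=True)` when constructing the estimator, and flip the flag to `False` once the script has been validated locally.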

Training in local mode also makes it easy to monitor metrics such as GPU utilization, so we can check that our code is written to take full advantage of the hardware we're using. In this case, running nvidia-smi in a terminal confirms that we are using all of the GPUs on the ml.p3.8xlarge, which lets us train our fairly simple ResNet model very quickly.
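If you would rather check GPU usage from a notebook cell than from a terminal, the same information can be read programmatically. This is a minimal sketch under stated assumptions: it relies on nvidia-smi's `--query-gpu` and `--format=csv,noheader,nounits` options, the choice of fields is just one reasonable selection, and `parse_gpu_stats` and `read_gpu_stats` are hypothetical helper names.

```python
import subprocess

# Fields queried here are an illustrative choice; nvidia-smi supports others.
QUERY = ["nvidia-smi",
         "--query-gpu=index,utilization.gpu,memory.used",
         "--format=csv,noheader,nounits"]


def parse_gpu_stats(csv_text):
    """Parse lines such as '0, 97, 15000' into one dict per GPU."""
    stats = []
    for line in csv_text.strip().splitlines():
        index, util, mem = [field.strip() for field in line.split(",")]
        stats.append({"gpu": int(index),
                      "utilization_pct": int(util),
                      "memory_used_mib": int(mem)})
    return stats


def read_gpu_stats():
    """Run nvidia-smi once and return the parsed per-GPU statistics."""
    output = subprocess.check_output(QUERY).decode("utf-8")
    return parse_gpu_stats(output)
```

Calling `read_gpu_stats()` while training is in progress should return one entry per GPU. If some GPUs show utilization near zero, the training script is probably not distributing work across all devices.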

After the estimator finishes training, you can create and test an endpoint locally. Again, set instance_type to 'local_gpu'.

    predictor = m.deploy(initial_instance_count=1, instance_type='local_gpu')

Now you can generate a few predictions to make sure the inference code works. It is a good idea to do this before deploying to a production endpoint, but you can also generate a few predictions for a one-off assessment of model accuracy.

    from cifar10_utils import read_images

    filenames = ['images/airplane1.png',
                 'images/automobile1.png',
                 'images/bird1.png',
                 'images/cat1.png',
                 'images/deer1.png',
                 'images/dog1.png',
                 'images/frog1.png',
                 'images/horse1.png',
                 'images/ship1.png',
                 'images/truck1.png']

    image_data = read_images(filenames)

    # Send each image to the local endpoint and print the predicted class index
    for i, img in enumerate(image_data):
        response = predictor.predict(img)
        print('image {}: class: {}'.format(i, int(response)))

The result should look like this:

    image 0: class: 0
    image 1: class: 9
    image 2: class: 2
    image 3: class: 3
    image 4: class: 4
    image 5: class: 5
    image 6: class: 6
    image 7: class: 7
    image 8: class: 8
    image 9: class: 9
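For the one-off accuracy assessment mentioned earlier, the predicted class indices can be compared against labels derived from the file names. The sketch below rests on two assumptions that the post does not state: that each file name begins with its class name, and that class indices follow the standard CIFAR-10 ordering (airplane = 0 through truck = 9). `expected_label` and `accuracy` are hypothetical helpers.

```python
# Standard CIFAR-10 class ordering (assumed to match the model's output indices)
CIFAR10_CLASSES = ["airplane", "automobile", "bird", "cat", "deer",
                   "dog", "frog", "horse", "ship", "truck"]


def expected_label(filename):
    """Derive the class index from a name like 'images/airplane1.png'."""
    stem = filename.split("/")[-1].rsplit(".", 1)[0]  # e.g. 'airplane1'
    class_name = stem.rstrip("0123456789")            # e.g. 'airplane'
    return CIFAR10_CLASSES.index(class_name)


def accuracy(filenames, predictions):
    """Fraction of predictions that match the label implied by each file name."""
    correct = sum(1 for name, pred in zip(filenames, predictions)
                  if expected_label(name) == pred)
    return correct / float(len(filenames))
```

Under those assumptions, the sample output above gets nine of the ten images right, since image 1 (the automobile) is predicted as class 9. Ten images are far too few for a meaningful accuracy estimate, so treat this only as a sanity check on the inference path.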

Now that you have validated your training and inference scripts, you can deploy them to the SageMaker managed environment to train at a larger scale or on a recurring basis, and to generate predictions from a real-time hosted endpoint.

But first let's clean up the local endpoint. This is because only one endpoint can be running locally at a time.

    m.delete_endpoint()

You can shut down your notebook instance from the Amazon SageMaker console by going to the Notebook page and selecting Stop. Doing so avoids incurring compute charges until you start the instance back up. Alternatively, you can delete the notebook instance by choosing Actions and Delete.

This blog post demonstrates how to use the Amazon SageMaker Python SDK local mode on the recently released multi-GPU notebook instance types to quickly test a large-scale image classification model. Starting today, you can use local mode training to accelerate your testing and debugging cycles. Just make sure you have the latest version of the SageMaker Python SDK, install the other required tools, and change one line of code.

David Arpin is an AI Platforms Selection Leader at AWS with a background in leading data science teams and product management.